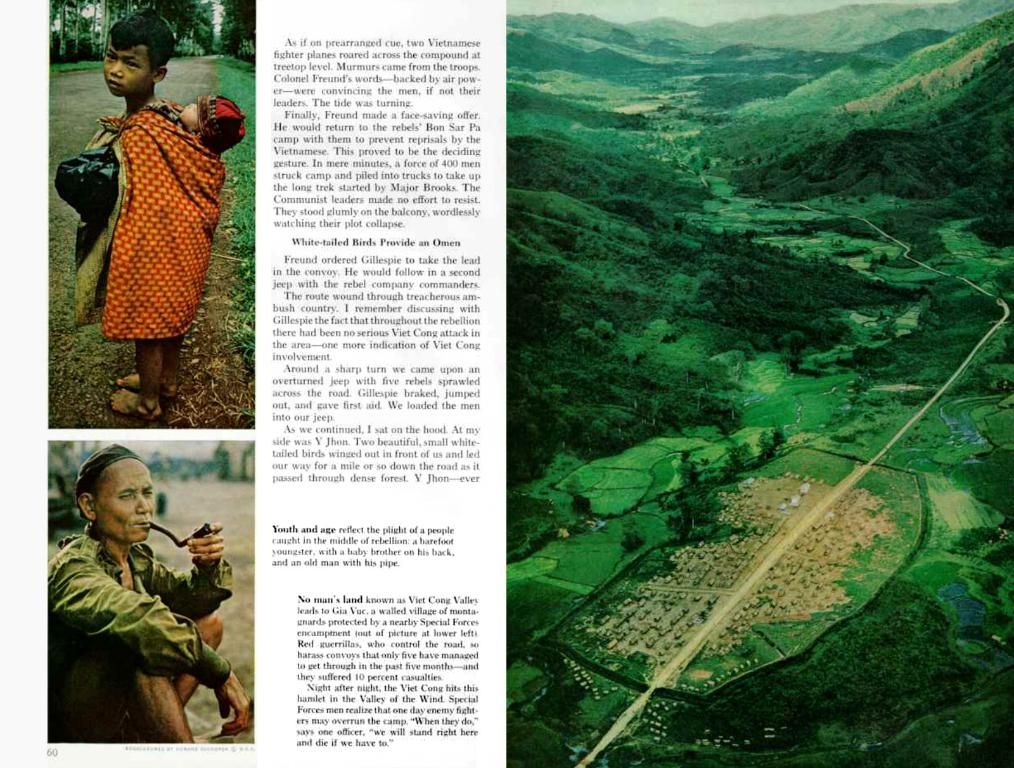

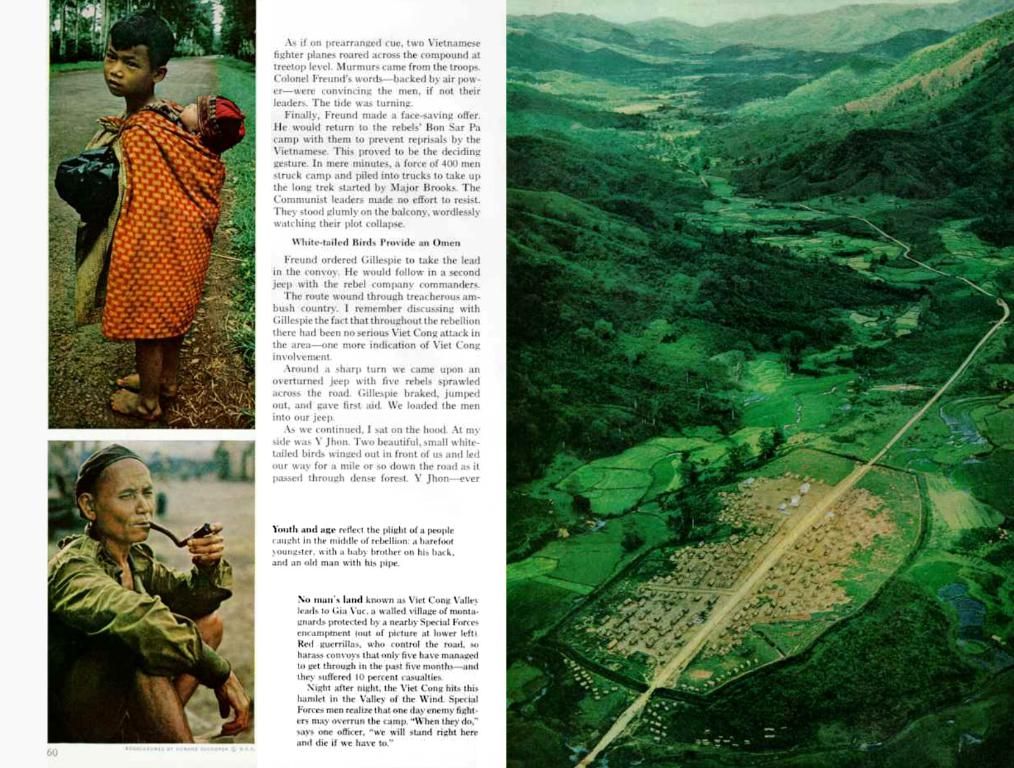

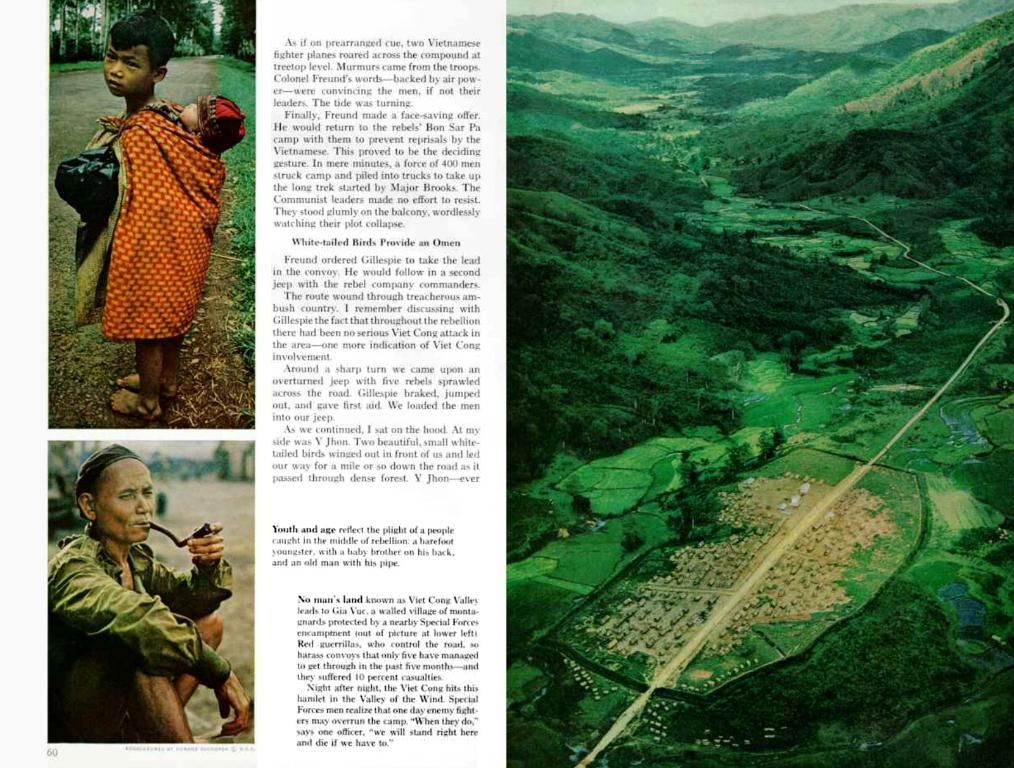

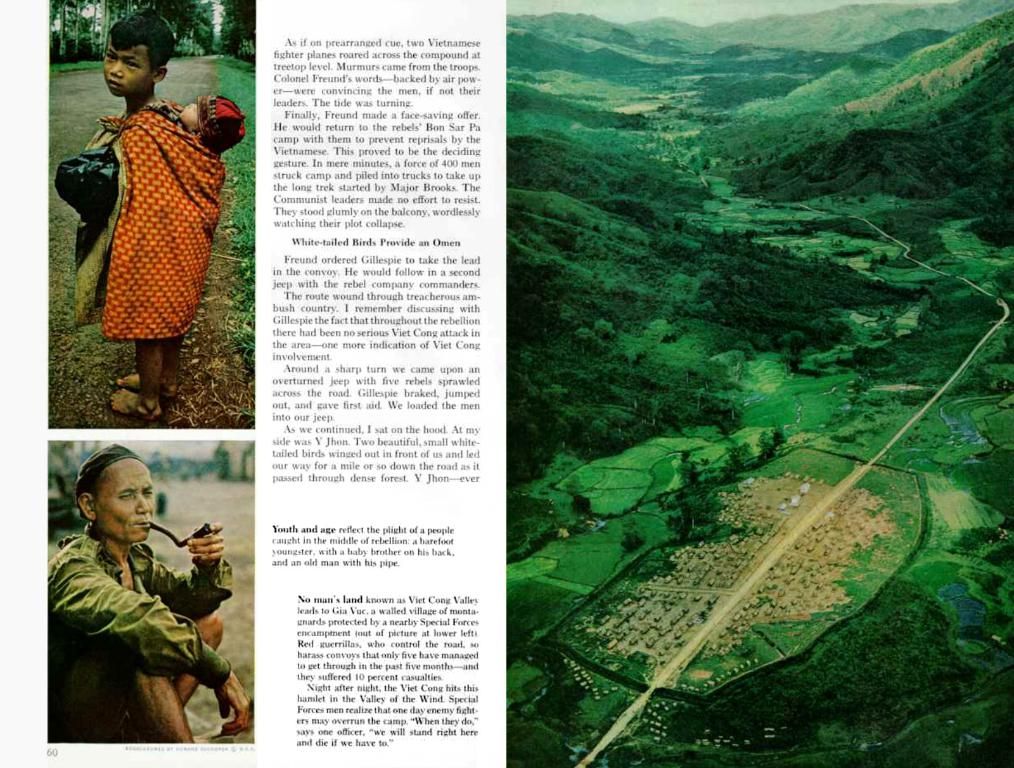

If Your AI Is Hallucinating, Don't Blame the AI

Let's get real about AI hallucinations. This is a serious issue you've got to address when you're running a business with AI. The last thing you want is an AI that's spouting false information like it's going out of style! A properly utilized AI should not be a mindless conduit of nonsense; instead, it should be a well-oiled machine that cranks out accurate and meaningful insights.

So, stop playing the blame game with AI. If your AI is hallucinating left and right, maybe it's you who's not providing it with the right tools for the job.

When these genie-like AI tools start making stuff up, it's usually because they're lacking the specific data they need to create a solid, fact-based answer. Sometimes, they can't find what they're looking for, or they simply don't comprehend the question well enough to get the correct answer. It's not that they're being malicious; it's just that they don't know any better. And hey, that's what you're there for: to feed the beast with the right brain food!

Newer models such as OpenAI's o3 and o4-mini actually hallucinate more often than their predecessors, by OpenAI's own testing, getting a little too "creative" when they're stuck for the right answer. More powerful tools can produce more hallucinations, but they can also deliver more valuable results when given the proper guidance.

Here's the deal: don't starve your AI of data. Treat your AI like you would a new team member: give it the tools it needs for success. Properly nourish it with relevant, high-quality data, and it'll thank you by spitting out information that makes sense and helps your business thrive.

But even then, remember to keep your wits about you. AI can be a fantastic asset, but it's not intended to replace human thinking. Keep asking questions, and verify the answers your AI gives you to make sure they're on point and backed by factual evidence.

Question your AI, and it will reward you with better insights.

So why do hallucinations happen?

There's no need to sit around scratching your head trying to figure out why AI hallucinates. Every large language model, save the occasionally annoying Clippy, is making informed guesses based on probability: given the text so far, it predicts the most likely next word.

They take in billions of examples from their training data and use that information to create coherent sentences and paragraphs. With increased processing power and access to internet-scale data, these systems have become capable of carrying on full-blown chat conversations, as we saw with the introduction of ChatGPT.

But, as the AI naysayers like to point out, this doesn't make them truly intelligent beings. Instead, they're just expert imitators, regurgitating human intelligence that's been fed into them.

That might be true, but as long as the data is correct and the analysis is useful, who cares?

What happens if the AI doesn't have the data it needs? It fills in the blanks. Sometimes it's funny, and other times it's a total mess.
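To see why filling in the blanks is the default behavior, here is a deliberately simplified toy sketch, not how any real model is implemented. Raw scores for candidate answers are turned into probabilities with a softmax, and those probabilities always sum to 1, so there is always a top pick, even when no option is well supported. The candidate answers, the scores, and the `min_confidence` abstain rule are hypothetical illustrations; standard LLM decoding has no such abstain step built in.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Convert raw scores into probabilities that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def pick_answer(candidates: list[str], scores: list[float], min_confidence: float = 0.5):
    """Return (answer, probability); answer is None when the best option is too weak.

    The abstain threshold is a hypothetical guardrail, not standard LLM behavior.
    """
    probs = softmax(scores)
    best = max(range(len(candidates)), key=lambda i: probs[i])
    if probs[best] < min_confidence:
        return None, probs[best]  # abstain instead of guessing
    return candidates[best], probs[best]

years = ["1998", "2004", "2011"]  # hypothetical answers to "When was Acme founded?"
print(pick_answer(years, [4.0, 1.0, 0.5]))  # strong evidence for one answer
print(pick_answer(years, [1.0, 1.0, 1.0]))  # no evidence either way
```

The first call returns "1998" with a probability of about 0.93. The second returns None because every option sits at one-third; without the threshold, the model would still confidently emit one of them.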

When you build AI agents for business purposes, this risk compounds. Agents are supposed to provide actionable insights, but they make decisions along the way, and each decision builds on the ones before it. If the foundation of the decision-making process is shaky, the output will be garbage, and that's not what you want.
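A quick back-of-the-envelope calculation shows why chained decisions raise the stakes. Assuming, purely for illustration, that each step of an agent's workflow is right 95% of the time and that steps fail independently, the chance that every step is right is the per-step accuracy raised to the number of steps. Real agent errors are rarely independent, so treat this as intuition, not a measurement.

```python
def chain_success_probability(step_accuracy: float, steps: int) -> float:
    """Probability that all steps are correct, assuming independent steps."""
    if not 0.0 <= step_accuracy <= 1.0:
        raise ValueError("step_accuracy must be between 0 and 1")
    return step_accuracy ** steps

for steps in (1, 5, 10, 20):
    # 95% per-step accuracy is an illustrative assumption, not a measured figure
    p = chain_success_probability(0.95, steps)
    print(f"{steps:>2} steps: {p:.1%} chance of a fully correct run")
```

At ten steps, a 95%-accurate step yields a fully correct run only about 60% of the time; at twenty steps, roughly 36%.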

But don’t fret, because our team tackled this issue head-on. We didn't just create a fancy robot; we made sure it runs on the right data:

- Double-check the data: Build the agent to ask the right questions and verify that it has the correct data before proceeding. It's better for the agent to acknowledge that it doesn't have the right data and halt the process rather than fabricating information.

- Implement a playbook: Define a semi-structured approach for your agent to follow. Structure and context matter most at the data-gathering and analysis stages. Let your agent loosen up and get creative once it has the facts in hand and is ready to synthesize the information.

- Create a high-quality data extraction tool: Make sure you're not just throwing an API call into the mix. Take the time to write custom code that gathers the right amount and variety of data, complete with quality checks along the way.

- Show the work: The agent should cite its sources and provide links to the original data so users can independently verify the information and explore it further. No magic tricks allowed!

- Build guardrails: Identify potential pitfalls and create safeguards against errors that simply cannot be allowed. In our case, when the agent charged with analyzing a market is missing critical data, such as our SimilarWeb data, it stops instead of generating an analysis without it.
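The "double-check the data" and "build guardrails" items in the list above can be sketched as a pre-flight check that runs before any text is generated. This is a minimal sketch under stated assumptions: the source names `company_profile` and `similarweb_traffic`, the `MarketContext` container, and `MissingDataError` are hypothetical stand-ins, not our production code, and the model call itself is left as a placeholder.

```python
from dataclasses import dataclass, field

class MissingDataError(Exception):
    """Raised when a required source is absent, so the agent halts instead of guessing."""

# Hypothetical source names; "similarweb_traffic" stands in for the SimilarWeb data mentioned above.
REQUIRED_SOURCES = ("company_profile", "similarweb_traffic")

@dataclass
class MarketContext:
    company: str
    sources: dict = field(default_factory=dict)  # source name -> fetched payload

def check_required_data(ctx: MarketContext) -> None:
    """Fail loudly if any required source is missing or empty."""
    missing = [name for name in REQUIRED_SOURCES if not ctx.sources.get(name)]
    if missing:
        raise MissingDataError(f"Cannot analyze {ctx.company}: missing {', '.join(missing)}")

def analyze_market(ctx: MarketContext) -> str:
    check_required_data(ctx)  # guardrail runs before any generation step
    # Placeholder: a real agent would call the model here, grounded in ctx.sources.
    return f"Analysis of {ctx.company} based on {len(ctx.sources)} sources"

ctx = MarketContext("Acme Corp", {"company_profile": {"employees": 120}})
try:
    print(analyze_market(ctx))
except MissingDataError as err:
    print(err)  # Cannot analyze Acme Corp: missing similarweb_traffic
```

Raising an exception, rather than returning a partial answer, makes the halt impossible to ignore: whatever calls the agent has to handle the missing data explicitly.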
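For "create a high-quality data extraction tool" and "show the work" in the list above, one simple pattern is to drop extracted records that fail basic quality checks and carry each surviving record's source URL through to the final output. The field names (`claim`, `url`), the quality rule, and the example.com links below are illustrative assumptions.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SourcedFact:
    claim: str
    source_url: str  # link back to the original data so users can verify it

def passes_quality_check(record: dict) -> bool:
    """Hypothetical rule: keep a record only if it has a non-empty claim and an http(s) URL."""
    claim = str(record.get("claim", "")).strip()
    url = str(record.get("url", ""))
    return bool(claim) and url.startswith(("http://", "https://"))

def extract_facts(records: list[dict]) -> list[SourcedFact]:
    return [SourcedFact(str(r["claim"]).strip(), r["url"]) for r in records if passes_quality_check(r)]

def render_with_citations(facts: list[SourcedFact]) -> str:
    """Number each claim and list its source underneath, like footnotes."""
    body = [f"{fact.claim} [{i}]" for i, fact in enumerate(facts, start=1)]
    notes = [f"[{i}] {fact.source_url}" for i, fact in enumerate(facts, start=1)]
    return "\n".join(body + [""] + notes)

raw = [
    {"claim": "Acme Corp has about 120 employees.", "url": "https://example.com/acme/profile"},
    {"claim": "Acme is about to be acquired.", "url": ""},  # no source: dropped
]
print(render_with_citations(extract_facts(raw)))
```

Records with no usable source never reach the user, and every claim that does appear comes with a numbered link back to its origin.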
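The "implement a playbook" item in the list above can be expressed as an ordered list of stages, each with its own instructions and sampling temperature: tight and near-deterministic while gathering and analyzing, looser only at synthesis. This is a sketch assuming a generic `call_model(prompt, temperature)` function that you would wire to whichever LLM API you use; the stage names, instructions, and temperature values are illustrative, not tuned recommendations.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Stage:
    name: str
    temperature: float  # lower values make sampling more deterministic
    instructions: str

# Hypothetical playbook: structured early, creative only once the facts are in hand.
PLAYBOOK = (
    Stage("gather", 0.0, "List only facts stated in the sources below. If something is missing, say so."),
    Stage("analyze", 0.2, "Analyze these facts. Do not add information that is not present."),
    Stage("synthesize", 0.7, "Write clear, actionable recommendations based on this analysis."),
)

def run_playbook(call_model: Callable[[str, float], str], sources: str) -> dict[str, str]:
    """Run each stage in order, feeding every stage's output into the next."""
    outputs: dict[str, str] = {}
    context = sources
    for stage in PLAYBOOK:
        prompt = f"{stage.instructions}\n\n{context}"
        context = call_model(prompt, stage.temperature)
        outputs[stage.name] = context
    return outputs

# Stand-in model so the sketch runs without an API key.
def fake_model(prompt: str, temperature: float) -> str:
    return f"(output at temperature {temperature})"

print(run_playbook(fake_model, "Source: Acme Corp company profile (example text)"))
```

Keeping every stage's output in the returned dictionary also makes it easy to audit where a bad conclusion first crept in.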

We've integrated these principles into our recent release of three new agents, with many more on the way. For example, our AI Meeting Prep Agent for salespeople doesn't just ask for the name of the target company. It also probes for the goal of the meeting and the identities of the people involved, so it can offer more insightful answers. It doesn't need to fabricate information because it draws on a wealth of company data, digital data, and executive profiles to guide its recommendations.
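Probing before answering, as the Meeting Prep Agent does, can be sketched as a check for missing inputs that turns each gap into a follow-up question. The field names and question wording below are hypothetical, chosen only to mirror the inputs described above (target company, meeting goal, attendees).

```python
from dataclasses import dataclass, field

@dataclass
class MeetingPrepRequest:
    target_company: str
    meeting_goal: str = ""
    attendees: list[str] = field(default_factory=list)

# Hypothetical follow-up questions, keyed by the field they fill in.
FOLLOW_UPS = {
    "meeting_goal": "What do you want to get out of this meeting?",
    "attendees": "Who will be in the meeting, and what are their roles?",
}

def follow_up_questions(request: MeetingPrepRequest) -> list[str]:
    """Return a question for every input that is still empty."""
    return [question for name, question in FOLLOW_UPS.items() if not getattr(request, name)]

request = MeetingPrepRequest(target_company="Acme Corp")
for question in follow_up_questions(request):
    print(question)  # ask the user before generating any prep notes
```

In this sketch, generation would start only once `follow_up_questions` returns an empty list, so the agent never has to guess at the meeting's purpose or audience.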

Now, are our agents perfect? Not yet, and nobody has built perfect AI so far. But recognizing the problem and tackling it head-on is a hell of a lot better than sweeping it under the rug and pretending it doesn't exist.

Want fewer hallucinations? Give your AI a heaping plate of high-quality data.

If your AI is still hallucinating, maybe it's not the AI that needs fixing. Maybe it's your approach: trying to take advantage of these powerful new tools without investing the time and effort needed to get them right.

AI hallucinations can be attributed to the lack of specific data necessary for creating a fact-based response. In the realm of business, relying on AI for decision-making without proper data can lead to incorrect or misleading results. To combat this, it's essential to double-check the data, implement a playbook, create a high-quality data extraction tool, cite sources, and build guardrails to ensure the AI produces accurate and actionable insights.